Deepfakes

- Greg Robison

- Mar 18, 2024

Updated: Dec 10, 2024

THE FACE OF DECEPTION & THE EROSION OF TRUST

As a consequence of [deepfakes], even truth will not be believed. The man in front of the tank at Tiananmen Square moved the world. Nixon on the phone cost him his presidency. Images of horror from concentration camps finally moved us into action. If the notion of … believing what you see is under attack, that is a huge problem. - Nasir Memon, professor of computer science and engineering at New York University

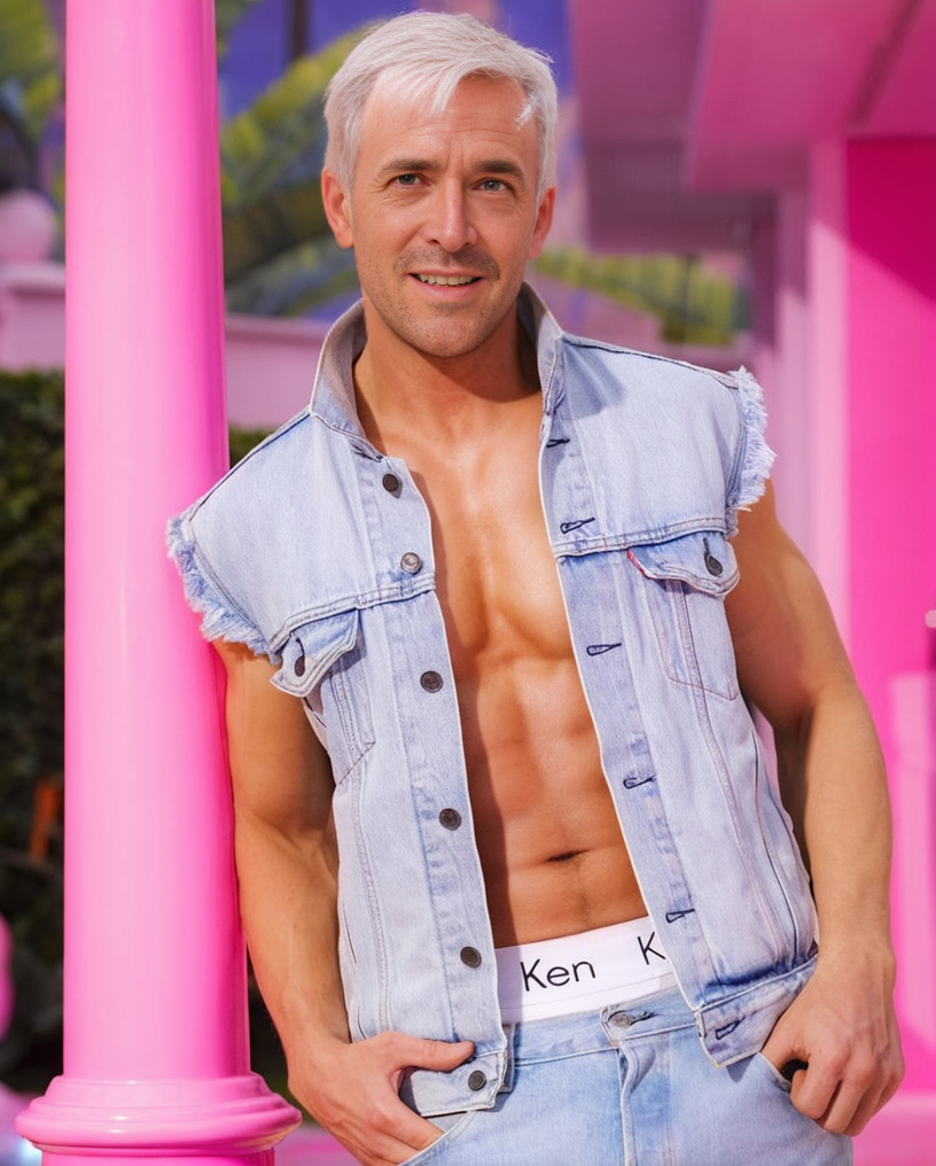

(Note: Stephen Bohnet has agreed to the usage of his likeness throughout this post.)

INTRODUCTION

With recent advances in artificial intelligence (AI), deepfake technology has been taken to another level of believability. Deepfakes are synthetic images, videos or audio clips in which a person's likeness is replaced with someone else's, created using advanced machine learning techniques. A recent deepfake controversy with Taylor Swift brought the technology to the attention of the general public and raised questions about its place in our society. Originally designed and used to create realistic and engaging content, deepfake technology has quickly demonstrated its potential to influence public opinion, reshape entertainment, and even disrupt democratic processes by fabricating convincing falsehoods. Its rapid evolution is a significant milestone in the development of AI, showcasing the technology's ability to mimic human faces and movements to a believable degree.

Deepfakes are incredibly powerful because they exploit the deep-seated trust we place in our own eyes. “Seeing is believing”, and videos have long been considered reliable records of reality. Deepfakes hijack our faith in photorealism – they can mimic the subtle facial expressions, lip movements, vocal intonations, and other nuances that we intuitively rely on to recognize a person. This ability allows deepfakes to preserve the style and mannerisms of the individuals being simulated, increasing believability. Unlike photoshopped images, quality deepfakes don't trigger any uncanny sense of wrongness - they feel real in a way that can easily override our rational skepticism. Deepfakes have the unique power to trick our brains and make even the most extraordinary scenes seem plausible.

Caught in the middle of the latest deepfake controversy are open-source tools. On the one hand, open-source software democratizes access to powerful AI tools, fostering innovation and creativity. On the other hand, it also makes it easier for bad actors to create deceptive content, which challenges our already-fragile ability to discern truth from fabrication. This situation highlights the need to balance promoting technological innovation against guarding against its misuse. Now that these tools are widely available, we need effective strategies for regulation and enforcement that can unleash the power of open source in AI while mitigating its risks, so that the technology is a net positive for society.

UNDERSTANDING DEEPFAKE TECHNOLOGY

Deepfakes have been around for as long as we have been able to draw; the tools have just gotten better over time. Until recently, Photoshop was the tool of choice for putting someone else’s face into an image. Now, AI tools are the most powerful option. Many deepfakes are created with generative adversarial networks (GANs), in which two AI models are pitted against each other: one generates images, while the other evaluates their authenticity. By iterating back and forth between the two models, the system progressively refines its output, blurring the lines between reality and fabrication. Some diffusion image generation models, which can create nearly any image from text prompts, have additional face-swap capabilities that make creating deepfakes incredibly easy. The software first detects the faces in an image, then replaces the detected face with the target face, ensuring that the swapped face matches the original in pose, expression, and lighting for a nearly seamless result.
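To make the back-and-forth between the generating model and the evaluating model a little more concrete, here is a minimal sketch of the two scores a GAN tries to improve, assuming the common cross-entropy formulation. The function names and the example probabilities are made up for illustration; real deepfake tools train large neural networks on these kinds of scores rather than running these few lines.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Loss for the evaluating model (the discriminator).

    d_real: probabilities it assigned to genuine images being real.
    d_fake: probabilities it assigned to generated images being real.
    Lower is better: it wants d_real near 1 and d_fake near 0.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Loss for the generating model (non-saturating form).

    Lower is better: it wants the discriminator to rate fakes near 1.
    """
    return sum(-math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical scores early in training: the fakes are easy to spot.
early = generator_loss([0.05, 0.10])
# Hypothetical scores later on: the discriminator is close to guessing.
late = generator_loss([0.45, 0.50])
print(early > late)
```

Each model is updated to lower its own loss, and because the two losses pull in opposite directions, progress by one forces the other to improve. Once the discriminator can do no better than rate everything at 0.5, the fakes have become statistically hard for it to tell apart from real images.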

In the case of GANs and face-swap technology, AI does all the heavy lifting, making the process quick and easy and even enabling the creation of convincing videos. As AI research has produced new neural network architectures, vast datasets of people’s faces, and more computational power for training models, the sophistication of deepfakes has improved greatly, and it will continue to improve. As models get better at recognizing and replicating the nuances of human facial expressions and voices, it will become more and more challenging to distinguish between genuine and fabricated content.

And now with open-source AI tools and websites, anyone with just a little technical expertise can create their own deepfakes. The fun uses have to be weighed against the potentially devastating effects of misinformation, political manipulation, and violations of personal privacy. There is another necessary balance between innovation and safeguarding our individual rights to our own likenesses. There have been benign uses, like images of Pope Francis in a puffy coat, and more contentious ones, like a video of Mark Zuckerberg giving a sinister speech. As deepfake technology improves, the stakes get even higher, including identity theft, financial heists, and sexual harassment. We need to reevaluate our legal infrastructure and our position on deepfake technology in light of these concerns. Unfortunately, the open-source software community is at the center of the controversy.

THE OPEN-SOURCE PARADIGM

Open-source software is freely available to the public, allowing anyone to view, modify, or distribute the software as they see fit. Much of our modern technical infrastructure relies on open-source software, including Linux, Android, Firefox, Python, Apache Server, and MySQL. This model of software distribution is founded on principles of collaboration, transparency, and community-driven development. By drawing on contributors from around the world, this collective expertise is the engine that drives innovation. Closed-source software, on the other hand, hides its code and restricts its usage. Innovations in cloud computing, IoT, blockchain, machine learning, web development, and operating systems are largely built with open-source tools.

"Fake Fake Baby"

Many deepfake tools are open source and freely available on the internet, meaning the barrier to entry is a computer, a couple of images, and a tutorial. You don’t need extensive computing resources or proprietary technology. This democratization of AI technology fosters innovation and educational opportunities, but it also creates opportunities for misuse, highlighting the need for ethical considerations and potential regulatory measures. And because the open-source community is so resourceful, these projects will keep advancing deepfake technology, making deepfakes even harder to detect yet easier to make. Here is a simple YouTube tutorial you can follow for easy-to-make deepfakes: https://youtu.be/ooaulo-zujg

ETHICAL AND SOCIETAL IMPLICATIONS

So now that anyone can create convincing deepfakes, the implications extend into the realms of privacy invasion, misinformation and beyond. The ability to create indistinguishable replicas of real people saying or doing things they never did poses a significant threat to personal privacy and the integrity of information. The potential for harm is not hypothetical; children are creating deepfake porn of their classmates and people are fabricating political speeches, undermining public trust in media. The ease of deepfake creation magnifies these concerns, as it allows for the rapid spread of falsehoods on social media with little recourse for those affected or punishment for those creating the images. It is already ruining people’s lives.

We are already living in an age of rampant misinformation, the effects of which can be dramatic. With US elections coming up in 2024, a deepfake video of a public figure making false claims can spread virally before it can be verified, causing public confusion, affecting stock prices, or even inciting violence. According to the Center for Countering Digital Hate, the volume of AI-generated disinformation related to elections has been rising by 130% per month on X over the past year, and Sumsub found a 10x increase in deepfakes from 2022 to 2023. We need a comprehensive response now, one that includes technological solutions like watermarking and detection tools, legal frameworks, and public awareness campaigns to mitigate the risks associated with deepfake technology. Balancing technological innovation with ethical responsibility will take both tools for detecting deepfakes and an open public discussion about the topic.

THE CHALLENGE OF REGULATION AND ENFORCEMENT

Laws should prohibit non-consensual porn, fraud, harassment, defamation, and similar harms committed with deepfakes, but the tech should remain legal for benign purposes. Unfortunately, the current legal landscape concerning deepfakes is a patchwork of laws across various jurisdictions. In the United States, the National Defense Authorization Act requires the Department of Homeland Security to issue annual reports on the potential harms of deepfakes and to explore detection and mitigation solutions. Additionally, the Identifying Outputs of Generative Adversarial Networks Act focuses on researching deepfake technology and developing standards for authenticity measures. States like Texas, Virginia, and California have taken steps to address specific issues related to deepfakes. Texas has banned deepfakes that aim to influence elections, Virginia targets deepfake pornography, and California prohibits doctored media of politicians close to elections. However, the legal discussion around deepfakes runs into First Amendment rights in the U.S., where the line between protecting individuals and preserving freedom of expression becomes blurred. Restrictions on deepfakes might be seen as impinging on free speech unless the deepfakes are defamatory or violate copyright laws.

The EU is trying to be proactive in regulating deepfakes, with proposals requiring the removal of deepfakes from social media platforms and fines for non-compliance. The Digital Services Act and the proposed EU AI Act include measures for monitoring digital platforms and transparency requirements for deepfake providers. The variance in laws at the state, country, and global levels means that enforcement is complex at best and nonexistent at worst. And the speed of innovation often outpaces the development of legal and regulatory frameworks, meaning we’re always playing catch up.

BALANCING INNOVATION WITH RESPONSIBILITY

One can make a compelling argument that open-source technology preserves real innovation. It democratizes technology, allowing widespread access to advanced tools and fostering collaboration. It has been important in developing deepfake technology, allowing for creative and benevolent uses in filmmaking, education, and even the restoration of historical footage. However, with great power comes great responsibility: the responsibility to ensure these powerful tools are not used for harm. The ethical use of these tools hinges on a community-driven approach to governance and self-regulation. Users should disclose when deepfakes are used; clear disclosure about synthetic media (including in metadata) can help build trust among the public. Developers and users must navigate the thin line between innovation and misuse, building a community where technology serves our society and minimizes potential harm.
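As one illustration of what disclosure in metadata could look like, here is a rough sketch that writes a small label for a synthetic media file and ties it to the file's contents with a SHA-256 hash. The field names, the tool name, and the stand-in file bytes are all hypothetical and do not follow any particular standard; the sketch only shows the general idea.

```python
import hashlib
import json

def build_disclosure(media_bytes, tool_name, description):
    """Return a JSON string declaring that a media file is synthetic."""
    record = {
        "synthetic": True,          # hypothetical field names throughout
        "tool": tool_name,
        "description": description,
        # The hash ties this label to one exact file.
        "sha256": hashlib.sha256(media_bytes).hexdigest(),
    }
    return json.dumps(record, indent=2, sort_keys=True)

def matches_file(disclosure_json, media_bytes):
    """Check that a disclosure label refers to these exact bytes."""
    record = json.loads(disclosure_json)
    return record["sha256"] == hashlib.sha256(media_bytes).hexdigest()

# Stand-in bytes; a real script would read the media file from disk.
fake_video = b"stand-in bytes for a generated video file"
label = build_disclosure(fake_video, "example-face-swap-tool",
                         "Face swapped with the subject's consent")
print(label)
print(matches_file(label, fake_video))
```

A label like this only helps when creators attach it honestly and platforms choose to display it, which is why disclosure is as much a community norm as a technical feature.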

The open-source community has typically balanced innovation and responsibility through licensing, codes of conduct, and community standards. Some open-source projects that have developed deepfake generators also develop detection tools, ensuring that advances in creation are matched by advances in recognition and mitigation. Other promising developments include digital watermarking, blockchain-based content authenticity verification, and advanced detection algorithms that can identify barely perceptible inconsistencies. Continually training models on deepfake datasets can improve detection accuracy and help mitigate the spread of deepfakes. Finally, the community should also encourage a culture of accountability where developers and users establish norms and guidelines that prioritize ethical usage.
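For readers curious about how a digital watermark can hide inside an image, here is a toy sketch of least-significant-bit watermarking, one of the simplest techniques. The pixel values and watermark bits are made up, and this is not how any specific product works; production watermarks are designed to survive cropping and compression, which this one would not.

```python
def embed_watermark(pixels, bits):
    """Hide bits (0 or 1) in the least significant bit of each pixel value."""
    if len(bits) > len(pixels):
        raise ValueError("not enough pixels to hold the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit of the pixel, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked

def extract_watermark(pixels, length):
    """Read back the first `length` hidden bits."""
    return [p & 1 for p in pixels[:length]]

image = [200, 13, 77, 54, 255, 0, 128, 91]  # made-up grayscale values (0-255)
mark = [1, 0, 1, 1, 0, 0, 1, 0]             # made-up watermark bits
marked = embed_watermark(image, mark)

# The watermark can be recovered exactly from the marked image.
print(extract_watermark(marked, len(mark)) == mark)
# No pixel changes by more than 1, so the mark is invisible to the eye.
print(max(abs(a - b) for a, b in zip(image, marked)))
```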

Beyond regulation, education and public awareness play a necessary role in combating deepfake misinformation. People are getting concerned: almost two-thirds of adults think AI will increase the spread of misinformation for the 2024 elections. Everyone needs to know about the nature of deepfakes, the potential harm they can cause, and how to critically evaluate images, videos, and voice recordings for authenticity. It will require governmental initiatives and funding, education in schools, and outreach by media organizations that are actively using the technology. We will need collaboration between technologists, policymakers, educators, and the public around the development and enforcement of clear legal standards about the creation and distribution of deepfakes, encouraging the ethical use of the technology. The better we can navigate this tricky space together, the better we can ensure its positive effects and develop trust.

TEN TIPS FOR SPOTTING DEEPFAKES

To help educate the public on how to spot deepfakes, here are some telltale signs of a digitally altered or wholly digitally created image or video:

Facial Inconsistencies: Look for irregularities in facial features. Deepfakes may have issues with the alignment of eyes, ears, or other facial features. The face might appear to be slightly off-center or asymmetrical. Hairlines may not look natural, especially around ears.

Unnatural Blinking: In early deepfakes, the subjects often had a lack of natural blinking or eye movement. Although newer deepfakes have improved on this, inconsistencies in blinking or eye movement can still be a giveaway.

Poor Lip Sync: Check if the lip movements are perfectly synchronized with the audio. In many deepfakes, the mouth and lips do not match up seamlessly with the spoken words.

Inconsistent Lighting or Shadows: Analyze the lighting and shadows on the face and background. If they seem inconsistent—like a light source affecting the face differently from the rest of the scene—that could indicate manipulation.

Skin Texture: Deepfakes often struggle to replicate skin texture accurately. The skin may appear too smooth, waxy, or uniformly textured. Pay attention to the pores, wrinkles, and other natural skin features as well as consistency throughout a video.

Unusual Hair: Hair can be challenging to render realistically. Look for signs of blurriness, edges that blend unnaturally with the background, or hair that moves in an unrealistic manner.

Background Anomalies: Often, the focus of a deepfake is on the face, leading to less attention on the background. Look for strange artifacts, inconsistencies, or a lack of detail in the background.

Compression Artifacts: Deepfake videos might display unusual compression artifacts, especially around the face or when the face is moving. These could manifest as blocks of distorted pixels or odd color shifts.

Audio Inconsistencies: Listen for any discrepancies in the voice. The tone, pitch, or cadence might be off, or the voice might not sound natural or consistent with the person's known voice.

Contextual Clues: Finally, consider the context of the video. If it shows a well-known person acting out of character or in an unexpected setting, or if the source of the video is dubious, these could be clues that the video is a deepfake.
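Some of these checks can be partly automated. As a rough sketch of the blinking tip, the code below counts blinks from a list of per-frame eye-openness scores. It assumes some face-tracking tool has already produced those scores (that tool, the example numbers, and the 0.2 threshold are all hypothetical), and an unusual blink rate is only a hint, never proof on its own.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count blinks in a list of per-frame eye-openness scores.

    A blink is counted each time the score drops from open to closed;
    consecutive closed frames belong to the same blink.
    """
    blinks = 0
    was_closed = False
    for score in eye_openness:
        is_closed = score < closed_below
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks

def blinks_per_minute(eye_openness, fps, closed_below=0.2):
    """Convert the blink count into a per-minute rate for the clip."""
    minutes = len(eye_openness) / fps / 60.0
    return count_blinks(eye_openness, closed_below) / minutes

# Made-up scores for a 2-second clip at 5 frames per second.
scores = [0.31, 0.30, 0.08, 0.29, 0.32, 0.30, 0.07, 0.06, 0.28, 0.31]
print(count_blinks(scores))
print(blinks_per_minute(scores, fps=5))
```

A long clip in which the subject almost never blinks would stand out with a check like this, though newer deepfakes reproduce blinking well enough that it should be combined with the other signs in the list.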

THE FUTURE LANDSCAPE OF DEEPFAKES

Even with today’s capabilities, there is still room for improvement in the production of convincing deepfakes. We will see technological advances, and unless we address the problem, we will see more misuse, casting a greater shadow over any legitimate usage. Not only will advances in AI make deepfakes more sophisticated, but the technology will also become available to more and more people. Hopefully this access will create new uses in entertainment, education, and beyond: bringing historical figures to life for history lessons, allowing novice creators to make convincing special effects, or even offering therapeutic benefits for those suffering from dementia. We will have to find these beneficial uses without compromising our individual rights or societal values.

Shaping the future of deepfakes is a responsibility we all share, through both innovation and responsible usage. The discussion about deepfakes reflects our values as a society, our rights to privacy, and our pursuit of truth in the age of digital manipulation. As we have these discussions, that collective responsibility cannot be overstated. It involves a collaborative effort among technologists, legislators, educators, and the public to foster an environment where innovation thrives but is tethered to ethical standards and transparent practices. How we navigate this path will define not just the future of deepfake technology but the very basis of digital trust and authenticity for generations to come.

CONCLUSION

Deepfakes aren’t going away anytime soon; the cat has long since left the bag. We need to highlight beneficial and creative uses to counter malicious ones. We need to disclose the usage of synthetic media. We need better deepfake detectors. We need better education about how to spot deepfakes. We need to focus on mitigating harm via regulation. Making sure that the evolution of deepfake technology is guided by strong ethical principles is necessary to harness its potential for good while safeguarding individual privacy, maintaining public trust, and preserving the integrity of our information. Only with collective dialogue and action can we strike the right balance of innovation and regulation, where technology amplifies human creativity and potential.